Integrating Graduate Attributes with Assessment Criteria in Business Education: Using an Online Assessment System

Darrall Thompson

University of Technology Sydney

Darrall.Thompson@uts.edu.au

Lesley Treleaven

Patty Kamvounias

Betsi Beem

Elizabeth Hill

The University of Sydney

Abstract

This paper describes a study of the integration of graduate attributes into Business education using an online system to facilitate the process. 'ReView' is a system that provides students with criteria-based tutor feedback on assessment tasks and also provides opportunities for online student self-assessment. Setup incorporates a process of 'review' whereby assessment criteria are grouped into graduate attribute categories and reworded to make explicit the qualities, knowledge and skills that are valued in student performance. Through this process, academics clarify and make explicit the alignment of assessment tasks to learning objectives and graduate attribute development across units and levels of a program of study. The application of ReView in three undergraduate Business units was undertaken as a collaborative action research project to improve alignment of graduate attributes with assessment, identification of assessment criteria and feedback to students. This paper describes the use of ReView and presents an analysis of post-ReView data that has institutional implications for improving assessment and self-assessment practices.

Introduction

The term graduate attributes used in this paper is intended to include a broad range of personal and professional qualities and skills, together with the ability to understand and apply discipline-based knowledge. In this context, graduate attributes also subsume a number of terms used in different countries and levels of education such as 'key skills' (Drew, Thorpe & Bannister, 2002), 'generic attributes' (Wright, 1995), 'key competences' (Mayer, 1992), 'transferable skills' (Assiter, 1995) and the terms 'employability skills' and 'soft skills' that are increasingly popular in the business sector (BIHECC, 2007).

The Australian Government attempted to impose external validation of graduate attribute development in the Higher Education sector by introducing its own Graduate Skills Assessment test developed by the Australian Council for Educational Research. This was a two-hour multiple choice test and one-hour essay to be taken by students at entry and exit from their degree programs. This move was unsuccessful for a number of reasons (Thompson, 2006) but added to the impetus for universities to take a more serious approach to the validation of their graduate attribute statements.

The majority of Australian universities have engaged with the processes of graduate attribute development in recognition of their responsibility to equip graduates with the attributes needed for lifelong learning in a rapidly changing world and workplace. There is clearly an ongoing need in Business education to develop students' employability and in particular their awareness of ethical considerations, global sustainability and equity issues including intercultural sensitivity (BIHECC, 2007).

Institutional support for the integration of graduate attributes in teaching, learning and assessment processes has been patchy and not without problems (Hoban et al., 2004). Educational research (Barrie, 2004) supports the integration of these attribute developments with existing curricula rather than a 'bolt-on' approach through the addition of extra units of study. Barrie (2004) claims that:

It is apparent that Australian university teachers charged with responsibility for developing students' generic graduate attributes do not share a common understanding of either the nature of these outcomes, or the teaching and learning processes that might facilitate the development of these outcomes (p. 261).

Graduate attributes are often mentioned in curriculum documentation but the effective integration of these into developmental approaches in the classroom has been somewhat elusive. Steven and Fallows (1998) concluded that "students are concerned that success in a university course may not connect well with employment chances" (in Nunan, 1999, p. 3) and that "there are two recurring arguments for the emphasis on (generic) skills: students need these skills to succeed in their academic work, and graduates need these skills to get jobs" (in Nunan, 1999, p. 8).

Alignment of graduate attributes with assessment processes also appears to be minimal. Given that "assessment is the single most powerful influence on student learning in formal courses" (Boud, Cohen & Sampson, 2001, p. 67), graduate attribute statements cannot be considered valid unless assessment criteria are explicitly linked to attribute development. Research also indicates that 'ticking boxes' and mapping graduate attributes only to learning objectives or goals often results in "a representation of the teachers' perspective and expectations, and may not be aligned with what the students both experience and perceive in terms of their development of graduate attributes" (Bath et al. 2004, p. 325). The lack of viable processes with which to engage staff in linking assessment to attributes led to the development of an approach using an online assessment system and the study described in this paper.

The Business Graduate Attribute Context

Recognition of the need for action in the Business education context is reflected in a report by the Business Council of Australia (2006). The report suggests that universities should be teaching critical thinking and ethical approaches together with the practical skills needed for employment. Further, the report suggests that such development become a core focus for both university undergraduate and postgraduate courses, noting:

Companies were concerned that education and training systems were not providing people with appropriate skills in areas that were increasingly vital in creating the type of workplace culture in which innovation thrives. In particular, a number of companies noted that management education was focussed on finance and marketing but was not providing graduates with the 'soft' skills such as teamwork, that enabled innovative use of these capabilities (p. 25).

An Australian Industry Group report (2006) describes the outcomes of a survey of over 500 employers, stating:

They [employers] are demanding higher levels of skills, frequent updating of skills and excellent 'soft' skills as well as technical skills. Over 90 per cent [of employers] look for people who are flexible and adaptive, willing to learn on the job, team workers, technically competent and committed to excellence (p. viii).

In particular, communication and teamwork skills that contribute to productive and harmonious relations, together with problem-solving skills, are highly sought for productive Business outcomes.

In addition, many Australian Business schools have obtained, or are seeking, international accreditation such as EQUIS (European Quality Improvement System) or AACSB (Association to Advance Collegiate Schools of Business). The quality assurance process of AACSB, for example, requires each degree program to specify learning goals and demonstrate the student's achievement of learning goals for key management-specific knowledge and skills.

Having introduced the general case for a focus on graduate attributes and, more specifically, the need to address their development in the Business Higher Education context, the following sections describe our research approach using an online assessment system as a catalyst to the integration of graduate attribute assessment in an Economics and Business school.

Graduate Attributes and Assessment Criteria

Attempts to integrate graduate attributes into teaching and assessment have met with responses ranging from reluctance and resistance to full adoption (Rust, O'Donovan & Price, 2005). Student reluctance is understood in terms of their central focus on practical and technical skills for entry into employment. On the other hand, academics' resistance is understood in terms of their expectations that assessment is based only on discipline-specific content and that assessment of 'additional' attributes is a distraction or unnecessary extra work. Additionally, lack of awareness about graduate attribute development across a degree program further reduces the concern to integrate graduate attributes at the individual unit of study level (Harvey & Kamvounias, 2008).

However, there is substantial evidence not only that assessment drives learning (Ramsden, 2003) but also that assessment processes and student learning can be enhanced through a social constructivist approach (Rust, O'Donovan & Price, 2005). In such a constructivist approach, knowledge about assessment processes, criteria and standards is developed through the active engagement and participation of both students and their Business educators (Kember & Leung, 2005).

This paper describes an approach to the integration of graduate attributes with assessment criteria using an online system (ReView) to engage academic staff through constructive alignment (Biggs, 1999) and students through self-assessment.

The Study Site and Research Methodology

A pilot study in Business disciplines was initiated at the University of Sydney in the Faculty of Economics and Business with three lecturers, an academic consultant (designer of ReView) and the Faculty's senior academic adviser. Each of the lecturers brought a track record of innovation and commitment to pedagogy, demonstrated by their appointment as Faculty Learning and Teaching Associates for their disciplines. The three units of study were drawn from undergraduate Business Law, Political Economy (Honours), and Government and International Relations. Each unit had the same lecturer coordinating both Semester 1, 2006 and Semester 1, 2007.

Given the limited success of graduate attributes integration, despite earlier attempts in the Faculty (Harvey & Kamvounias, 2008), the project aimed to investigate whether use of an online assessment system (ReView) could:

- facilitate the alignment of graduate attributes with intended learning outcomes, teaching and learning activities, assessment tasks and assessment criteria and

- facilitate students' engagement with assessment processes and reflection on their attribute development.

A collaborative action research methodology (Treleaven, 1994) was employed that facilitated iterative processes of development in the design of individual Units of Study as well as collaboration as a project team in the design, implementation and evaluation of all three Units. Individual consultations were conducted by the academic consultant with each lecturer. Reflective meetings of the project team, in which collaborative learning and organisational learning were generated, were taped, transcribed, circulated and reviewed. The academic adviser's role was to manage the project including the research design, ethics approval, data collection and analysis, and preparation of journal papers for publication.

Three artifacts were available to support and focus this integration of graduate attributes work: the Faculty's Unit of Study outline (http://teaching.econ.usyd.edu.au/UoS/) with supporting guidelines and examples, the online assessment system of ReView, and student feedback given on the University's Unit of Study Evaluation at the end of the semester - http://www.itl.usyd.edu.au/use/use_ncs.pdf. The study commenced with each of the three lecturers reviewing their unit of study in consultation with the ReView designer, to assist them to align and integrate the University's graduate attributes with the learning goals of their unit by developing and refining assessment criteria for each task.

Two sets of data were collected: one set post-ReView and another set for comparative pre/post ReView analysis. The post-ReView data included a student survey, self-assessment data and student evaluation (USE) data, both quantitative and qualitative from each of the three units. The comparative data included the unit of study outlines and the student evaluations (USEs) from the previous year before engagement in the ReView process. The comparative data is used principally in a further paper that critically investigates the catalytic change processes of engaging academic staff in the project.

Alignment of Graduate Attributes with Assessment Criteria

The online system used in this study is currently in development at the University of Technology Sydney and implemented in the School of Design. ReView provides students with online criteria-based tutor feedback on assessment tasks and the opportunities for online student self-assessment.

The process of 'review' for the three lecturers involved was conducted through a number of meetings and included a series of steps to facilitate the integration of attributes. Assessment criteria were:

- identified from a broad range of qualities, knowledge and skills reflecting the intended learning outcomes of each task contextualised within the unit of study, including discipline-specific content.

- made explicit through careful wording of the levels to be achieved in order to improve students' engagement with and understanding of the intended learning outcomes.

- then grouped under the generic attribute categories used at The University of Sydney. All assessment criteria were coded using the same five categories, so that students could gain feedback about their development of these generic attributes progressively through a unit of study and across unit boundaries through their program of study.

- weighted equally, with finely grained criteria added when a greater emphasis on particular intended learning outcomes was required.

- worded to assist students who opted to self-assess their own work against the assessment criteria to enhance their learning and attribute development.

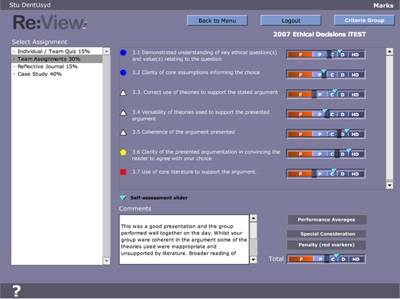

Figure 1: ReView screenshot showing a student view of the feedback screen

By colour coding each criterion to an attribute category, students are able to view pie charts of the proportion of assessment criteria that relate to attribute categories for each assessment task in the unit of study. At The University of Sydney, there are five attribute categories: Personal and Intellectual Autonomy; Research and Inquiry; Information Literacy; Communication; and Ethical, Social and Professional Understanding. Definitions of the categories were made available by clicking on the 'Criteria Group' button at the top right of all screens.
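The proportions behind such a pie chart can be illustrated with a minimal sketch. It assumes, as described above, that criteria are equally weighted, so a category's share is simply its count of criteria divided by the total. The function name and the example criteria are hypothetical and are not taken from ReView itself.

```python
from collections import Counter


def category_proportions(criteria):
    """Return each attribute category's share of equally weighted criteria.

    `criteria` is a list of (criterion_text, category) pairs.
    """
    if not criteria:
        return {}
    counts = Counter(category for _, category in criteria)
    total = len(criteria)
    return {category: count / total for category, count in counts.items()}


# Hypothetical criteria for one assessment task (illustrative wording only).
essay_criteria = [
    ("Clarity of written argument", "Communication"),
    ("Accuracy of referencing", "Information Literacy"),
    ("Depth of critical analysis", "Research and Inquiry"),
    ("Use of relevant evidence", "Research and Inquiry"),
]

shares = category_proportions(essay_criteria)
for category, share in sorted(shares.items()):
    print(f"{category}: {share:.0%}")
```

Because every criterion counts equally in this sketch, adding a second criterion under Research and Inquiry is what doubles that category's slice of the chart.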

Assessment Criteria and Self-assessment

Having engaged in the 'review' process, the three lecturers taught the revised units of study in Semester 1, 2007. Students were given a printed copy of the revised unit of study outline; staff used the ReView tool for marking and giving feedback, and some students used ReView for self-assessment (optional).

Lecturers were given the choice of marking directly online using ReView's data sliders or using a paper printout to mark offline. Benchmarking was facilitated with a 'Total' data-slider that moves the individual sliders against each criterion and recalculates the task weighted marks, adjusting the total mark whilst keeping the marker's relative assessment of each criterion. This feature facilitates both granular judgments (through individual criteria) and a holistic judgment (through total assessment mark) made on the aggregate mark for a specific assessment task. As ReView is web-based, when the lecturer was co-ordinating the unit with tutors, it was possible to view tutors' marks and comments when they were being entered and moderate where necessary before marks were published for the students to access.
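One way to picture the behaviour of the 'Total' slider is proportional rescaling. The sketch below is an assumption about how such an adjustment could work, not a description of ReView's actual implementation: it treats criteria as equally weighted, takes the task mark as the mean of the criterion marks, and scales every criterion by the same factor so that the ratios between them are preserved. The function name `rescale_to_total` is hypothetical.

```python
def rescale_to_total(marks, new_total):
    """Scale equally weighted criterion marks so their mean equals new_total.

    Assumes marks are percentages (0-100). Scaling by a common factor keeps
    the marker's relative assessment of each criterion unchanged.
    """
    if not marks:
        return []
    old_total = sum(marks) / len(marks)
    if old_total == 0:
        # No relative information to preserve; place every criterion at the target.
        return [float(new_total)] * len(marks)
    factor = new_total / old_total
    # Cap at 100; if a cap applies, the resulting mean falls short of new_total.
    return [min(100.0, mark * factor) for mark in marks]


original = [60, 70, 80]           # hypothetical criterion marks, mean 70
adjusted = rescale_to_total(original, 77)
print([round(mark, 1) for mark in adjusted])
```

A real system would also need a rule for redistributing marks when a criterion reaches the 100% ceiling; this sketch simply caps the value.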

Where there was a large variation between a student's self-assessment and the marker's criteria gradings, the markers were able to use this variation as a basis for feedback in the online comment box.

At the end of semester, students completed the Unit of Study Evaluation (USE) and a paper-based or online survey pertaining to ReView and graduate attribute development.

Results

The two stated aims of this study were to investigate whether the use of an online system, in this case ReView, could first, facilitate the alignment of graduate attributes with intended learning outcomes, teaching and learning activities, assessment tasks and assessment criteria; and second, facilitate students' engagement with assessment processes and their reflection on their attribute development. A third, unstated aim was to improve the quality of feedback on assessment tasks and, relatedly, the quality of student learning outcomes.

Alignment of Graduate Attributes

There is substantial evidence to argue that first, engagement in this study generated changes to teaching practice, particularly in the capability of staff to embed graduate attributes within assessment tasks and align them with learning outcomes; and second, that an improvement in aspects related to student learning outcomes was achieved.

This evidence can be triangulated from a number of sources. In the transcripts of the project team's reflections it is possible to see how ReView acted as a catalyst in this capability development and the alignment of graduate attributes with intended learning outcomes, teaching and learning activities, assessment tasks and assessment criteria. Furthermore, the Unit of Study Outlines for all three units demonstrated beneficial changes and improvements in terms of the criteria used by the Faculty in its annual audit of outlines, e.g. extent of alignment across the curriculum, provision to students of clear assessment tasks and criteria.

Positive student comments regarding the benefits of ReView in clarifying and aligning criteria and expectations of the unit were also noted on the student survey of ReView:

- I really liked how the criteria for assessment was made well known

- Clearly explained how the lecturer reached her grading conclusions

- Made clear expectations of course

Improved Assessment Feedback

The student evaluation scores (USE) relating to feedback on learning and overall satisfaction were high compared with Faculty averages. The item 'Feedback on assessment assisted my learning in this unit of study' (Item 8 in the survey) showed substantial improvement across all three units, with the level of agreement increasing by at least 12 percentage points to a range of 63-89%. The Faculty benchmark is 70% strongly agree/agree and a movement of 10% is generally viewed as a noteworthy achievement. Thus, this response to Item 8 is strong data, especially when it is compared to the Faculty score of 59% on that item. It is the survey item most closely related to the functionality of ReView and suggests that the online system and changes that happened as a result of the 'review' process had significant impact on feedback assisting student learning.

This quantitative evidence of improvement in feedback on assessment is confirmed by analysis of students' qualitative comments on the 2006 and 2007 student USEs. There were many negative comments in 2006 which referred to lack of feedback. The comments in 2007 indicated that the students were both pleased with the feedback and received more feedback, specifically through ReView. In one unit of study, 100% of comments were positive in 2007.

Furthermore, student USE comments drew attention to the use of ReView facilitating feedback throughout the semester. Sample comments specifically mentioning ReView were:

- Great feedback system on Blackboard (online ReView)

- Review system lets people improve

- Review was great and in depth – not only gave a mark, but also in depth comment, class average and were scored in different components

- Peer evaluation and Review was good – enabling a mid-way evaluation

- Review program gave quick and clear feedback

- Mid-semester feedback and other feedback enabled improvement of marks

- The ReView program was very useful and indicated clearly the outcomes achieved (or not)

- The use of the electronic assessment form was excellent in communicating the reason for my mark

- Very detailed feedback, not just ticks on assignments was good

- Review and comments very thorough

Two cohorts completed the student survey focusing on their experience of ReView and graduate attributes at the end of semester. One survey was conducted with hard copy in class and the other electronically. The third, planned as an online survey was not conducted in a timely manner due to tutor illness. The results are therefore incomplete, not affording full analysis. However, it is demonstrable from this student survey that the experience with online feedback for assessment, using ReView, was a very positive experience for one of the cohorts; whereas for another cohort, that experience was not positive.

These negative comments from the one cohort were consistent with the USE quantitative scores, which showed slight decreases in clear learning outcomes (-7%, from 98% to 91%), overall satisfaction (-4%, from 97% to 93%) and generic attributes (-3%, from 85% to 82%). Nevertheless, this cohort also reported increased agreement (+18%, from 56% to 74%) with the item 'feedback assisted me in my learning in this unit'.

There were criticisms from both cohorts about the form in which feedback was given and the appropriateness of some of the criteria:

- It did not show the numerical mark (this point was reiterated by most of the respondents)

- Disliked lack of opportunity to give positive feedback. Feedback tended to be focused around points for improvement rather than identifying strengths

- Couldn't improve for next time because there would be no next time

- An example of where I was confused.....I was asked to assess myself for academic honesty in an exam. I didn't cheat, so do I ward myself a high distinction

- Also some of the assessment criteria can't actually be marked, for example being ethical should not be marked in terms of distinction or credit. It is actually quite ridiculous in reality

- Too subjective, never any real feedback, what is required is written words to explain a student's situation better

- Felt as though teachers had a mark in mind and then adjusted the scale to achieve this mark eg we were only given distinctions for honesty and integrity as a mark of 100 would have effected the total mark

These criticisms were also echoed in the 2007 USE evaluations, although comments expressing dissatisfaction with the ReView program itself were from one unit of study only. This feedback provided further insight into student dissatisfaction with receiving grades rather than actual marks on ReView.

The following comments are useful feedback on how the ReView functionality can be improved in the next iteration of software development:

- Review was difficult to use, hard to receive feedback on game throughout preparation stages

- I prefer to have a "numerical" mark on blackboard for my assessment instead of a "letter"

- The review system was not helpful to me. By week 13 we still had not received marks for some assessments

- I found the feedback for assessments adequate but found the review online system too complicated and fiddly to be effective

- The review system was useful, but I still prefer marks against a set of criteria

The points about receiving numerical marks are interesting. The reason for not giving percentage marks comes from educational research that suggests students are less likely to use formative feedback if percentage marks are displayed or written on assignments (McLachlan, 2006). This is a research question that can be addressed in future iterations of the study.

Students' Self-assessment

In regard to the students' engagement with assessment processes, there was strong evidence to suggest that students had a thoughtful and reflective approach to their own self-assessment and to their attribute development.

Student evaluation scores (USE) on the item 'The unit of study helped me develop valuable generic attributes' (Item 3) improved in only one unit (from 64% to 88%). However, across all three units, scores now show a consistently high level of agreement for this item in 2007 (88%, 93% and 85%). This finding is important in the context of the Faculty's average of 69% level of agreement on this item and points to the potential for increasing staff capability with use of the ReView tool to bring graduate attribute development to the fore.

These quantitative results were supported by students' qualitative USE comments that indicate some students saw the assessment tasks as helping them to develop graduate attributes. Sample comments specifically mentioning ReView were:

- Using the review system showed us which parts we were lacking in and [need to] develop

- Review on [the] internet was very helpful, as was the detail in UoS handout

However, only one of the two cohorts who completed the ReView survey responded with comments that suggested development of their graduate attributes was facilitated by using ReView.

- You may identify an area of weakness (through self-assessing)

- Required us to self-reflect on our performance in assessments, unlike other units of study

- Helped discover weaknesses that I didn't realise I had

- Self-assessing was difficult at first though

- Made me understand where my thinking differed from the marker

- Good to see "performance averages" and "guess-timate" my marks before handing in work

Many of these comments relate to the self-assessment feature of ReView and as such are arguably evidence of development in the graduate attribute category of Personal and Intellectual Autonomy.

The evidence for students reflecting on their graduate attribute development is provided both quantitatively and qualitatively. Table 1 (below) shows the extent to which students self-assessed their work on ReView during the semester as a percentage of the actual and possible number of criteria self-assessed across the cohort. Between 58% and 64% of students assessed their work in two cohorts. One cohort (Unit 2), with the lowest percentage of students undertaking self-assessment, had many negative comments about the fact that ReView was designed to show grade indicators rather than percentage marks. It is possible that dissatisfaction with this feature of marking is reflected in the high percentage of students choosing not to self-assess.

Table 1: Self-assessment using ReView

| Self-Assessment S1 2007 | Unit 1 | Unit 2 | Unit 3 |
|---|---|---|---|
| Students in unit | Approx 20 | Approx 60 | Approx 160 |
| Total number of criteria assessments made in this unit | 558 | 3546 | 1638 |
| Self-assessed (%) | 64 | 15 | 58 |
| Not self-assessed (%) | 36 | 85 | 42 |
| Self-assessed criteria # | 359 | 538 | 954 |
| Under rated >5 (%) | 39 | 20 | 25 |
| Accurately rated +/- 5% (%) | 39 | 49 | 42 |
| Same (%) | 9 | 12 | 10 |
| Above (%) | 42 | 54 | 40 |
| Below (%) | 49 | 34 | 50 |
| Over rated >5 (%) | 21 | 31 | 33 |
| Criteria assessed at ≥95% (%) | 1 | 1 | 3 |

About 40% of students self-assessed their work to accord with that of the tutor or lecturer's marking on the criteria. Approximately a third of students underrated or overrated their performance against criteria, and very few gave themselves 95% or higher as a mark on any criterion. This spread of self-assessment may indicate that students were reflecting thoughtfully about their learning in these units.
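The under/accurate/over bands reported in Table 1 can be illustrated with a short sketch. It assumes that each band is defined by the difference, in percentage points, between a student's self-assessed mark and the marker's mark on the same criterion, with a tolerance of 5 points; the paper does not spell out the exact rule, and the function names and sample marks are hypothetical.

```python
from collections import Counter


def classify(self_mark, marker_mark, tolerance=5):
    """Band one self-assessed criterion against the marker's mark."""
    difference = self_mark - marker_mark
    if difference < -tolerance:
        return "under"
    if difference > tolerance:
        return "over"
    return "accurate"


def summarise(pairs, tolerance=5):
    """Return the percentage of self-assessed criteria falling in each band."""
    counts = Counter(classify(s, m, tolerance) for s, m in pairs)
    total = len(pairs)
    return {band: round(100 * counts[band] / total)
            for band in ("under", "accurate", "over")}


# Hypothetical (self_mark, marker_mark) pairs, not data from the study.
sample = [(50, 60), (70, 72), (80, 80), (90, 70)]
summary = summarise(sample)
print(summary)
```

Large differences flagged this way are the kind of variation that markers in the study could use as a starting point for written feedback.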

Conclusions

Whilst there is strong evidence that the online system was effective in facilitating the integration of graduate attributes in assessment criteria, there are three main areas highlighted for consideration and further study.

The first area relates to the main aim of the study regarding alignment of graduate attributes in curricula. In assisting academics' development of attribute-coded assessment criteria it will be necessary to develop an online wizard and database to assist lecturers in their formulation of appropriate criteria as the process is otherwise difficult without one-on-one support. The graduate attribute categories need to be clear and well differentiated. In the case of The University of Sydney, two graduate attribute categories, Information Literacy and Communication, are not widely understood and require clarification. There is not a shared set of meanings between staff and students or between students themselves. Assessment criteria must be assessable at varying standards by tutors or lecturers, e.g. it is inappropriate to use academic honesty of actual student performance as a criterion as it cannot be graded. Weighting of criteria can be achieved by adding criteria of equal value rather than by weighting single criteria of unequal value. In this way, the colour coding of graduate attribute categories reinforces emphasis.

Second, what constitutes feedback from staff to students needs addressing. Students did not necessarily recognise the slider positions against each criterion as giving them feedback on their performance or on their strengths and weaknesses on that criterion. When their self-assessment was higher than the tutor/lecturer's, staff feedback on that criterion indicated what they needed to improve. Some students who saw an empty comments box felt that they had received no feedback. It may be advisable not to display the comments box when no comments are entered. The ability for comments to be attached to each criterion will also be considered.

Many students in one cohort did not recognise the grade for their whole assessment as feedback, wanting instead their numerical mark. To encourage students to take a standards-based approach in lieu of the usual norm-referencing, assessment feedback via ReView will in future be released using only grades. However, given University policy concerning the provision of marks to students on all assessments, a decision has been made that marks will be released three days after the feedback in ReView. It is therefore important in future software design to easily populate the Blackboard gradebook with marks to comply with this marks release policy.

Third, and in regard to the second aim of the study, students elected to self-assess their work and the evidence appears to indicate that they were thoughtful in their own gradings against criteria. Making self-assessment obligatory may well increase the value of ReView for student learning. Options to consider are whether student self-assessment can be undertaken several times, formatively and summatively, and then locked in after marking. Another option is whether a student's self-assessment is visible to the marker, as some students were concerned about its availability influencing markers. The ReView survey indicated that students could benefit from some training on the use and functions of ReView. This training could be undertaken once an online introduction is developed for students to use themselves.

There were also a number of further outcomes as a result of this study. First, a curriculum alignment table has been extended by one of the lecturers in this study to include the assessment criteria on each assessment task http://teaching.econ.usyd.edu.au/UoS/library/CLAW2205Chart.doc. This table has been adapted for use in a range of other units and is being disseminated for adaptation by other staff more widely through the Faculty's Unit of Study website. Second, a set of assessment criteria was developed, trialled and evaluated to provide a databank/exemplars for academics to draw on. Third, a set of steps for the use of ReView was developed and documented. A range of resources to support academics using ReView (technical instructions, student instructions, UoS statements, evaluation survey) were developed, tested and are now available for subsequent use.

This study has been expanded in association with three other universities, and now forms part of an ongoing investigation with University of Technology Sydney as the lead institution, through a two-year Carrick Priority Projects Grant. As such, collaborative research into practices that can integrate development of generic attributes with assessment tasks, whilst developing students' capacity to self-assess their work, will address some of the challenges at Faculty and University level to improve assessment practices.

References

Assiter, A. (1995). Transferable skills: A response to the sceptics. In A. Assiter (Ed.), Transferable Skills in Higher Education. London, UK: Kogan Page Ltd.

Australian Industry Group. (2006). World class skills for world class industries: employers' perspectives on skilling Australia. Sydney: Australian Industry Group.

Barrie, S. (2004). A research-based approach to generic graduate attributes policy. Higher Education Research and Development. 23 (3) 261-275.

Bath, D.; Smith, C.; Stein, S. & Swann, R. (2004). Beyond mapping and embedding graduate attributes: bringing together quality assurance and action learning to create a validated and living curriculum, Higher Education Research & Development. 23 (3) 313-328.

Biggs, J. (1999). Teaching for Quality Learning at University, The Society for Research into Higher Education, Buckingham.

Business, Industry and Higher Education Collaboration Council. (2007). Graduate employability skills. Graduate Employability skills report. Retrieved October 17, 2007 from http://www.dest.gov.au/sectors/hig...

Boud, D.; Cohen, R. & Sampson, J. (Eds.) (2001). Peer Learning in Higher Education: Learning with and from each other. London: Kogan Page.

Business Council of Australia (2006). Report: New Concepts in Innovation: The Keys to a Growing Australia. PDF report downloaded 7.02.2007 http://www.bca.com.au/Content.aspx?ContentID=100408

Drew, S.; Thorpe, L. & Bannister, P. (2002). Key skills computerised assessments. Guiding principles. Assessment and Evaluation in Higher Education. 27 (2) 175-186.

Harvey, A. & Kamvounias, P. (2008). Bridging the implementation gap: a teacher-as-learner approach to teaching and learning policy. Higher Education Research and Development. 27 (1) 31-41.

Hoban, G.; Lefoe, G.; James, B.; Curtis, S.; Kaidonis, M.; Hadi, M.; Lipu, S.; McHarg, C. & Collins, R. (2004). A Web Environment Linking University Teaching Strategies with Graduate Attributes. Journal of University Teaching and Learning Practice. 1 (1) 10-19.

Kember, D. & Leung, D.Y.P. (2005). The Influence of Active Learning Experiences on the Development of Graduate Capabilities. Studies in Higher Education. 30 (2) 155-170.

Mayer, E. (1992). Putting Education to Work; The Key Competencies report. Melbourne: Australian Education Council and Ministers of Vocational Education, Employment and Training.

McLachlan, J.C. (2006). The relationship between assessment and learning. Medical Education 40 (8), 716–717. Blackwell Publishing, Inc.

Nunan, T. (1999). Graduate qualities, employment and mass higher education, paper presented at the Higher Education Research and Development Society of Australasia Annual International Conference 1999: Cornerstones: What do we value in Higher Education? University of Melbourne.

Ramsden, P. (2003). Learning to Teach in Higher Education, 2nd edn, Routledge, London.

Rust, C.; O'Donovan, B. & Price, M. (2005). A social constructivist assessment process model: how the research literature shows us this could be best practice. Assessment and Evaluation in Higher Education. 30 (3) 233-241.

Thompson, D. (2006). E-Assessment: The Demise of Exams and the Rise of Generic Attribute Assessment for Improved Student Learning. In T. Roberts (Ed.), Self, Peer and Group Assessment in E-Learning. USA: Idea Group.

Treleaven, L. (1994). Making a space: Collaborative inquiry as staff development. In P. Reason (Ed.), Participation in Human Inquiry (pp. 138-162). London: SAGE.

Wright, P. (1995). What Are Graduates? Clarifying The Attributes of 'Graduateness'. The Higher Education Quality Council (HEQC): Quality Enhancement Group.

Acknowledgements

The financial support received from the Office of Learning and Teaching, Faculty of Economics and Business, University of Sydney, for this research is gratefully acknowledged. We also thank Jarrod Ormiston for his excellent research assistance.